FAQ

Your frequently asked questions will appear here; check back regularly!

Errors and troubleshooting

Common issues

Problems when connecting or transferring data

How to solve the Connection timed out error when connecting to MeluXina?

- Ensure that you are using the correct port (8822), e.g.

  ssh yourlogin@login.lxp.lu -p 8822

- Ensure that your organization / network settings are not blocking access to port 8822

- Ensure that you are connecting to the master login address (login.lxp.lu) and not a specific login node (login[01-04].lxp.lu) as it may be under maintenance

- Check the MeluXina Weather Report for any ongoing platform events announced by our teams
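To tell a blocked port apart from a platform-side problem, you can test whether TCP port 8822 is reachable from your machine before involving SSH at all. The following is a minimal sketch, assuming a Linux machine with bash and the GNU coreutils timeout command; the function name port_reachable is ours and purely illustrative.

```bash
#!/bin/bash
# Illustrative helper (not part of any MeluXina tooling): returns 0 when a TCP
# connection to host:port can be opened within the given number of seconds.
port_reachable() {
    local host="$1" port="$2" seconds="${3:-5}"
    # bash opens a TCP socket when redirecting to /dev/tcp/<host>/<port>
    timeout "$seconds" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

if port_reachable login.lxp.lu 8822; then
    echo "Port 8822 is reachable from this machine"
else
    echo "Port 8822 is not reachable: check your network settings and the MeluXina Weather Report"
fi
```

If the port is reachable but SSH still times out, the problem is less likely to be a firewall on your side.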

How to solve Permission denied when using ssh yourlogin@login.lxp.lu -p 8822?

- Ensure that you're using the SSH key which was shared to the ServiceDesk portal and registered to your MeluXina account

- Ensure that you have added your SSH key to your SSH agent with the command

  ssh-add ~/.ssh/id_ed25519_mlux

  (you might first need to restart your SSH agent with the eval "$(ssh-agent -s)" command)

- Ensure that your SSH key is located in the appropriate folder (your SSH key may not be in the default folder .ssh/ and/or may not be exposed by your SSH agent)

- Your registered SSH key might not be stored on your current device. This can happen when you connect from another computer or try to hop from another HPC cluster to MeluXina: the SSH key you are currently using might not be registered to your MeluXina account.

How to solve Too many authentication failures when using ssh yourlogin@login.lxp.lu -p 8822?

- You may have too many SSH keys (more than 6) on your device. To ensure that you point to the appropriate SSH key, specify it with the -i (identity file) option in your connection command, e.g.

  ssh yourlogin@login.lxp.lu -p 8822 -i ~/.ssh/id_ed25519_mlux -o "IdentitiesOnly yes"

  You can also set the IdentityFile ~/.ssh/id_ed25519_mlux and IdentitiesOnly yes directives in your .ssh/config file

In any case, we suggest that you follow our connection guidelines in the "First Steps/Connecting" section of this documentation.

- Set up your .ssh/config file as described at the following link: https://docs.lxp.lu/first-steps/connecting/#connect-to-meluxina

- Add the -vvv option to your connection command, which will generate more output and might help you to identify and resolve your issue yourself.
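The IdentityFile and IdentitiesOnly directives mentioned above can be grouped into a single Host block. The sketch below writes such a block to a scratch file for review rather than editing ~/.ssh/config directly; the alias meluxina and the login yourlogin are placeholders, and the connection guidelines linked above remain the reference.

```bash
# Sketch only: the Host alias "meluxina" and the user "yourlogin" are placeholders.
config_snippet="$(mktemp)"
cat > "$config_snippet" <<'EOF'
Host meluxina
    HostName login.lxp.lu
    User yourlogin
    Port 8822
    IdentityFile ~/.ssh/id_ed25519_mlux
    IdentitiesOnly yes
EOF

# Print the block so it can be reviewed
cat "$config_snippet"
# After reviewing it, append it to your real configuration with:
#   cat "$config_snippet" >> ~/.ssh/config
# and connect with:
#   ssh meluxina
```

With IdentitiesOnly yes, SSH offers only the listed key instead of every key held by the agent, which avoids exhausting the server's authentication attempts.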

How to solve Failed setting locale from environment variables when using ssh yourlogin@login.lxp.lu -p 8822?

- You may be using a special locale; try connecting with

LC_ALL="en_US.UTF-8" ssh yourlogin@login.lxp.lu -p 8822

Problems with jobs that do not start

How to solve Job submit/allocate failed: Invalid account or account/partition combination specified when starting a job with sbatch or salloc?

- Ensure that you are specifying the SLURM account (project) you will debit for the job, with -A ACCOUNT on the command line or the #SBATCH -A ACCOUNT directive in the launcher script

How to solve Job submit/allocate failed: Time limit specification required, but not provided when starting a job with sbatch or salloc?

- Ensure that you are providing a time limit for your job, with -t timelimit or #SBATCH -t timelimit (timelimit in the HH:MM:SS format)
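Both submission errors above come from a missing directive, so a launcher that always carries the account and the time limit avoids them. The following is a minimal sketch: ACCOUNT is a placeholder for your project account, the 15-minute limit and the srun hostname payload are arbitrary examples, and a real job may need further options (partition, QOS, number of nodes) that are outside the scope of these two errors.

```bash
# Sketch only: write a minimal launcher to a temporary file.
launcher="$(mktemp)"
cat > "$launcher" <<'EOF'
#!/bin/bash -l
#SBATCH -A ACCOUNT
#SBATCH -t 00:15:00

srun hostname
EOF

# Check the script for shell syntax errors without running it
bash -n "$launcher" && echo "Launcher written to $launcher"
# Submit it from MeluXina with:
#   sbatch "$launcher"
```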

What to do if my job is not starting/stays pending?

- Jobs will wait in the queue with a PD (Pending) status until the SLURM job scheduler finds resources corresponding to your request and can launch your job; this is normal. In the squeue output, the NODELIST(REASON) column will show why the job has not started yet.

- Common job reason codes:

  - Priority: one or more higher-priority jobs are queued ahead of yours. Your job will eventually run; you can check the estimated StartTime using scontrol show job $JOBID.

  - AssocGrpGRES: you are submitting a job to a partition you don't have access to.

  - AssocGrpGRESMinutes: you have insufficient node-hours on your monthly compute allocation for the partition you are requesting.

- If the job does not start for a while, check the MeluXina Weather Report for any ongoing platform events announced by our teams, and if no events are announced, raise a support ticket in our ServiceDesk
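The two checks described above (the reason column of squeue and the StartTime field of scontrol) can be combined in a few lines. This is a sketch under assumptions: the helper name estimated_start is ours, JOBID is expected to hold the ID of one of your pending jobs, and the commands are only run when the Slurm client tools are present.

```bash
# Illustrative helper: reads "scontrol show job" output on stdin and prints
# the value of the StartTime field.
estimated_start() {
    grep -oE '(^| )StartTime=[^ ]*' | head -n 1 | cut -d= -f2
}

# Only query the scheduler when the Slurm client tools are available
if command -v squeue >/dev/null 2>&1; then
    # %i = job ID, %P = partition, %j = job name, %T = state, %r = reason
    squeue -u "$USER" -o "%.12i %.10P %.20j %.10T %r"
    if [ -n "${JOBID:-}" ]; then
        scontrol show job "$JOBID" | estimated_start
    fi
fi
```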

Problems when running jobs

What to do if I get -bash: module: command not found when trying to browse the software stack or load a module?

- Ensure you are not trying to run module commands on login nodes (all computing must be done on compute nodes, as login nodes do not have access to the EasyBuild modules system)

- Ensure that your .sh launcher script starts with #!/bin/bash -l (lowercase L), which enables the modules system

My job cannot access a project home or scratch directory, and it used to work

- Ensure that the project folder's permissions (ls -l /path/to/directory) have not been changed and still allow you access

- Check the MeluXina Weather Report for any ongoing platform events announced by our teams (especially for the Data storage category)
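The permission check above can be scripted so that it also runs at the start of a job. The sketch below is illustrative only: the helper name can_access is ours, /path/to/directory stands for your project directory, and comparing the directory's group with your own groups is a general Unix approach rather than a MeluXina-specific procedure.

```bash
# Illustrative helper: prints the owner, group and mode of a directory and
# succeeds only if the current user can list and enter it.
can_access() {
    local dir="$1"
    if [ ! -d "$dir" ]; then
        echo "$dir: not a directory (or not visible to you)"
        return 1
    fi
    ls -ld "$dir"
    [ -r "$dir" ] && [ -x "$dir" ]
}

id -nG    # the groups you currently belong to
if can_access /path/to/directory; then
    echo "access OK"
else
    echo "no access: compare the directory's group and mode with your groups listed above"
fi
```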

My job is crashing, and it used to work

- Ensure your environment (software you are using, input files, way of launching the jobs, etc.) has not changed

- Ensure you have kept your software environment up-to-date with our production software stack releases

- Ensure that you still have some space left in your home directory (see below)

- Check the MeluXina Weather Report for any ongoing platform events announced by our teams

- Raise a support ticket in our ServiceDesk and we will check together with you

I get an error message like OSError: [Errno 122] Disk quota exceeded

- Type myquota in your terminal when connected to a node (login or compute). Your home directory might be full.

- You might want to know which directories take up the most disk space

  - Sometimes, some of the space-consuming directories are hidden. This is the case, for instance, if you load a large pre-trained Hugging Face model without specifying where to store the model. Another example is pip, which by default stores installed packages in your home directory.

  - You can do the following to better understand the disk usage of your home directory:

    (login/compute)$ cd $HOME
    (login/compute)$ ncdu

- If you are using Python and installing several packages via pip, be careful about where packages are being installed. See the dedicated point below on how to control where pip installs packages.

- If you use HuggingFace or a similar tool with pre-trained models, verify where the model shards are being downloaded. See our example on how to change the directory where shards are stored for later use.
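When an interactive browser such as ncdu is not convenient (for example inside a batch script), a non-interactive listing gives a similar overview, hidden directories included. This is a minimal sketch assuming GNU du and sort; the helper name largest_dirs is ours.

```bash
# Illustrative helper: lists the largest first-level directories of a given
# directory (hidden ones included), biggest first. The total for the
# directory itself is part of the listing.
largest_dirs() {
    local dir="${1:-$HOME}" count="${2:-10}"
    du -h --max-depth=1 "$dir" 2>/dev/null | sort -rh | head -n "$count"
}

largest_dirs "$HOME" 10
```

Directories such as a hidden cache folder show up in this listing even though a plain ls would not display them.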

How do I change where pip installs packages?

If you do not want to use a venv, pip might quickly fill the space available in your $HOME directory, as this is its default installation location. To avoid this situation, you can change the directory in which pip installs packages.

First, add the directory in which you want pip to install packages to your $PYTHONPATH. To make this permanent, add the following to your ~/.bashrc file:

export DIR_PIP_INSTALL="/project/home/pxxxxxx/dir_where_you_want_pip_to_install/"

export PYTHONPATH=$DIR_PIP_INSTALL:$PYTHONPATH

Do not forget to source ~/.bashrc once your modifications are done and to verify that the $PYTHONPATH variable contains the target directory we have set up. Then, you should be able to install the package you want in the directory of your choice by doing:

pip install --target=$DIR_PIP_INSTALL hdf
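The verification step mentioned above (checking that $PYTHONPATH contains the target directory) can be done without reading the variable by eye. The following is a small sketch; the helper name pythonpath_contains is ours, and it assumes DIR_PIP_INSTALL has been exported as shown earlier.

```bash
# Illustrative helper: succeeds when the given directory is one of the
# colon-separated entries of $PYTHONPATH (a trailing slash is ignored).
pythonpath_contains() {
    local dir="${1%/}"
    [ -n "$dir" ] || return 1
    case ":${PYTHONPATH:-}:" in
        *":${dir}:"* | *":${dir}/:"*) return 0 ;;
        *) return 1 ;;
    esac
}

if pythonpath_contains "${DIR_PIP_INSTALL:-}"; then
    echo "PYTHONPATH contains $DIR_PIP_INSTALL"
else
    echo "PYTHONPATH does not contain the target directory; check your ~/.bashrc"
fi
```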

Important note:

If your workload is I/O-critical and you have access to the scratch partition, consider installing the I/O-related packages there to get noticeably faster read/write operations. For example:

pip install --target=$MYDIRINSCRATCH hdf

Of course, you must have defined $MYDIRINSCRATCH beforehand and added it to your $PYTHONPATH. You can prepend it to your permanent $PYTHONPATH as above:

export DIR_PIP_INSTALL="/project/home/pxxxxxx/dir_where_you_want_pip_to_install/"

export MYDIRINSCRATCH="path_to_your_dir_in_scratch"

export PYTHONPATH=$MYDIRINSCRATCH:$DIR_PIP_INSTALL:$PYTHONPATH

What to do if my Python script does not work (anymore) and I get a message like python: error while loading shared libraries: libpython3.ym.so.1.0: cannot open shared object file: No such file or directory, or if I get a ModuleNotFoundError?

Our different software stacks provide different Python versions, so it is easy to mix them up. Let's analyse a problematic workflow.

ml env/release/2023.1

ml Python/3.10.8-GCCcore-12.3.0

ml cuDF/23.10.0-foss-2023a-CUDA-12.2.0-python-3.10.8

cudf should work seamlessly:

python -c "import cudf"

Let's now import cuDF from an IPython console. We start by loading the IPython module.

ml IPython/8.14.0-GCCcore-12.3.0

The following have been reloaded with a version change:

1) Python/3.10.8-GCCcore-12.3.0 => Python/3.11.3-GCCcore-12.3.0

The module system warns us that the Python module has been reloaded with a different version, and this will be the root cause of our troubles. Typing ipython launches an interactive Python session.

Python 3.11.3 (main, Nov 13 2023, 00:27:08) [GCC 12.3.0]

Type 'copyright', 'credits' or 'license' for more information

IPython 8.14.0 -- An enhanced Interactive Python. Type '?' for help.

As you can see from the startup banner, ipython uses Python 3.11.3 but our cuDF module depends on Python 3.10.8! This is why a ModuleNotFoundError is raised when we try to import the cuDF module:

In [1]: import cudf

---------------------------------------------------------------------------

ModuleNotFoundError Traceback (most recent call last)

The bottom line is: ensure that you are consistently using the same Python version throughout your workflow. Pay particular attention to pip, as it installs packages linked to a specific Python binary.
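One way to catch this kind of mismatch early is to compare the interpreter that is actually on your PATH with the version your modules were built for, right after loading them. The sketch below is illustrative: the helper name same_minor is ours, and the expected version 3.10.8 is simply the one from the example above.

```bash
# Illustrative helper: succeeds when two version strings share the same
# major.minor part (e.g. 3.10.8 and 3.10.2).
same_minor() {
    [ "$(printf '%s' "$1" | cut -d. -f1,2)" = "$(printf '%s' "$2" | cut -d. -f1,2)" ]
}

expected="3.10.8"   # version the loaded modules were built for (from the example above)
if command -v python >/dev/null 2>&1; then
    actual="$(python -c 'import platform; print(platform.python_version())')"
    if same_minor "$expected" "$actual"; then
        echo "OK: python is $actual"
    else
        echo "Mismatch: expected $expected, but python is $actual" >&2
    fi
    # Shows which Python installation pip is tied to
    python -m pip --version
fi
```

Running this after every module load in a launcher script makes a silent version change visible before the job reaches the failing import.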

Trickier runtime problems and performance issues

My IntelMPI job hangs and I don't know why

If your application compiled with IntelMPI hangs with srun or mpirun during parallel execution, change the OFI provider to verbs or psm3, or load the libfabric module (which changes the default OFI provider to psm3):

- export I_MPI_OFI_PROVIDER=verbs or export I_MPI_OFI_PROVIDER=psm3

- module load libfabric

My multi-GPU-node job shows low bandwidth

- If experiencing low bandwidth when using MPI with GPUs, the following variable might help increase bandwidth: UCX_MAX_RNDV_RAILS=1. See the following link here for more details.

- If experiencing low bandwidth when using NCCL with GPUs, the following variable might help increase bandwidth: NCCL_CROSS_NIC=1. See the following link here for more details.

MPI/IO abnormally slow with OpenMPI.

OMPIO is included in OpenMPI and, starting with the 2.x versions, is used by default when invoking MPI/IO API functions. However, OMPIO has sometimes been found to cause severe bugs, data corruption and performance issues. Set the OMPI_MCA_io=romio321 environment variable to switch to the ROMIO component of the io framework in OpenMPI.

Open MPI's OFI driver detected multiple equidistant NICs from the current process message when using MPI code.

This warning can be ignored; it will be resolved in a future PMIx update.

mm_posix.c:194 UCX ERROR open(file_name=/proc/9791/fd/41 flags=0x0) failed: No such file or directory when running MPI programs compiled with OpenMPI.

This problem will be solved by exporting the environment variable: export OMPI_MCA_pml=ucx.

My job fails with psec and munge error when running a container with different MPI configurations than MeluXina

Typically, Slurm relies on the munge library for establishing client-server connections. In this situation, both Slurm and PMIx detected the munge library when they were built, but the PMIx/OpenMPI inside the container cannot find the library on the compute nodes where your processes are running. You can instruct PMIx to ignore munge and use its native built-in authentication mechanism instead:

PMIX_MCA_psec=native

My job fails with the error ib_mlx5_log.c:171 Transport retry count exceeded on mlx5_1:1/IB (synd 0x15 vend 0x81 hw_synd 0/0)

This error typically indicates that the network adapter repeatedly attempted to send a packet without success and timed out. Here are the common causes for this issue:

- Hardware Malfunctions: Failures can occur in InfiniBand adapters or switches, potentially cutting off connections and stopping data transfers to or from certain endpoints.

- Fabric Congestion: Sometimes the network can become congested, causing adapters to retry sending packets. If the congestion persists, the retries may exceed the limit, leading to the application crashing.

- Changes in the Network Fabric: Significant alterations in the network, such as servicing racks, can disrupt the routing tables of switches temporarily. If your data transfer uses affected links, it may result in reaching the retry limit, causing a crash.

- Asynchronous Communication Management: This cause is less frequent but can occur if there are many simultaneous transmissions.

In most instances, simply resubmitting your job may resolve the problem. However, if the problem persists, your code might be particularly sensitive to these issues. Adjusting the UCX_RC_TIMEOUT variable, which controls the retry interval, may help. You can check its default setting with ucx_info -f. If issues continue, consider alternative transports like UD or DC (default is RC).

Poor performance or performance degradation at large scale with MPI (OpenMPI or ParastationMPI runtimes)

Some MPI jobs might perform poorly at large scale (sometimes beyond 8 nodes) and show very poor strong scaling. If you have noticed this for your jobs, it is probably related to UCX and the selected transport modes. See here for more details on our available MPI runtimes.

From UCX documentation: On machines with RDMA devices, RC transport will be used for small scale, and DC transport (available with Connect-IB devices and above) will be used for large scale. If DC is not available, UD will be used for large scale.

The DC transport performs poorly on MeluXina, and we recommend using the UD transport at large scale (more than eight (8) nodes):

module load UCX-settings/UD (or module load UCX-settings/UD-CUDA on GPU nodes)

or

export UCX_TLS=ud_x,self,sm (or export UCX_TLS=ud_x,self,sm,cuda_ipc,gdr_copy,cuda_copy on GPU nodes)
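If the same launcher is used for both small and large runs, the transport can be selected from the node count of the job. This is a sketch under assumptions: the helper name ucx_tls_for is ours, the 8-node threshold is the one stated above, and SLURM_JOB_NUM_NODES is the variable Slurm sets inside a job allocation.

```bash
# Illustrative helper: prints the UCX_TLS value recommended above for jobs
# on more than 8 nodes, and nothing for smaller jobs (UCX default is kept).
# Pass "gpu" as second argument to include the CUDA transports.
ucx_tls_for() {
    local nodes="$1" kind="${2:-cpu}"
    if [ "$nodes" -gt 8 ]; then
        if [ "$kind" = "gpu" ]; then
            echo "ud_x,self,sm,cuda_ipc,gdr_copy,cuda_copy"
        else
            echo "ud_x,self,sm"
        fi
    fi
}

# SLURM_JOB_NUM_NODES is set by Slurm inside a job; default to 1 elsewhere
tls="$(ucx_tls_for "${SLURM_JOB_NUM_NODES:-1}")"
if [ -n "$tls" ]; then
    export UCX_TLS="$tls"
fi
echo "UCX_TLS=${UCX_TLS:-<UCX default>}"
```

Place this before the srun line of the launcher so that the exported variable is inherited by the MPI processes.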

Misc

Citing us

How to acknowledge the fact that I used Meluxina?

-

In publications

Add us to your publications' acknowledgement sections using the following template:

Acknowledgement template

The simulations were performed on the Luxembourg national supercomputer MeluXina.

The authors gratefully acknowledge the LuxProvide teams for their expert support. -

Citing LuxProvide

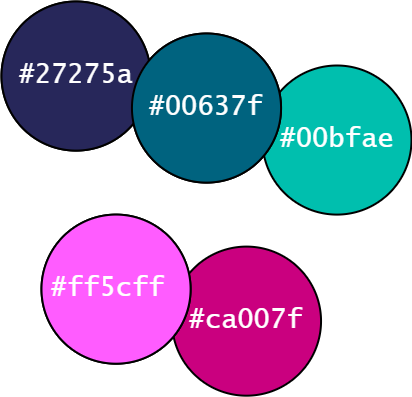

- Use the Luxprovide logo and the LuxProvide color palette:

Logo Color Palette

-

Citing MeluXina

- Use the MeluXina logo and the MeluXina color palette:

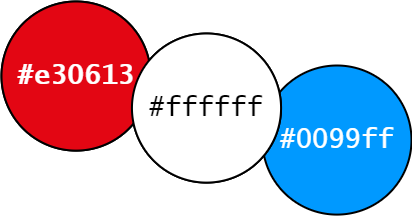

Logo Color Palette